Driver's License Tests: A Tale of Regulatory Philosophies (And Now I Can't Unsee the Pattern)

Or: How a dense legal paper sent me down memory lane and made global tech policy suddenly click

I was deep into a journal article by Pang Cheng Kit, published early this year, on the comparative analysis of global AI regulation when a sentence got me thinking…

“China has adopted what researchers call an ‘agile and iterative’ approach: vertical, sector-specific regulations that respond to emerging concerns as they arise, with strategic ambiguity that gives regulators discretion...”

The paper, published in the Singapore Academy of Law Journal in March 2025, compares how the EU, China, and South Korea are racing to regulate artificial intelligence. It's a sober, methodical analysis of legislative frameworks, risk-based approaches, and enforcement mechanisms. But as I read through the descriptions of these different regulatory philosophies, the frameworks felt... familiar. Not intellectually familiar—viscerally familiar. Like I'd lived these different approaches somehow.

Then it clicked: my driving license tests!

My driving tests: USA vs Chennai, India

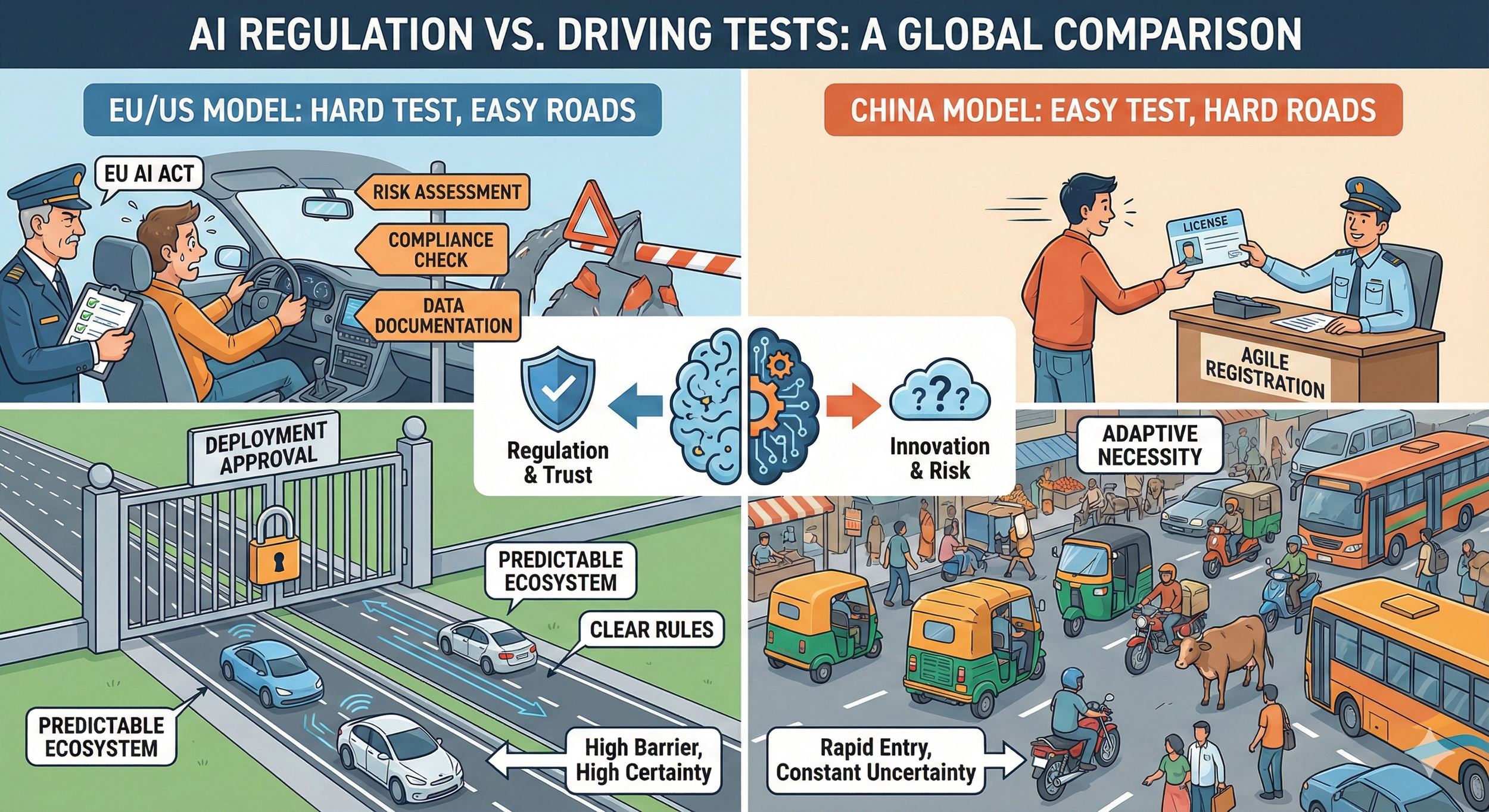

Picture generated by Nano Banana Pro

Back to the Paper: The Pattern Clicks

So there I was, coffee-fueled and nostalgic, when it hit me:

The EU's approach to AI regulation is basically my US driving test. China's approach is basically my Chennai license.

The entire paper suddenly rearranged itself in my mind.

The EU: Maximum Scrutiny at the Gate

The EU AI Act (entered into force August 2024, with enforcement being phased in) embodies the rigorous-entry approach:

Before you deploy (that is, before you drive):

Categorize your AI system by risk level

High-risk systems need conformity assessments (pass the examiner's checklist)

Document everything obsessively (prove you checked every blind spot)

Meet technical requirements for transparency, data quality, human oversight

Once you're approved:

The ecosystem is predictable

Clear rules govern operation

Heavy penalties for violations (up to €35M or 7% of global annual turnover, whichever is higher)

But you have certainty about expectations

The trade-off: High barrier to entry. SMEs struggle with compliance costs—just like I nearly gave up after test four. But consumer trust should be higher, and the market safer. It's my US driving test scaled to continental policy: filter rigorously at the gate, create predictable roads inside.

China: Deploy First, Filter Through Practice

China's "agile and iterative" approach targets specific AI applications as concerns emerge—algorithm recommendations, then deepfakes, then generative AI:

Getting started:

Relatively easy initial entry

Basic registration (drive forward, reverse, park)

Algorithm registry for systems with "public opinion properties"

Operating in practice:

Rules emerge based on what problems arise

Strategic ambiguity ("uphold core socialist values") requires constant reading of regulatory signals

Enforcement is discretionary and can be sudden

Companies that can't adapt get filtered out

Survivors develop a sixth sense for regulatory winds

The trade-off: Rapid innovation and deployment. But persistent uncertainty—you're never sure if your interpretation holds up. And the government maintains control through discretionary enforcement. Pure Chennai methodology: minimal gatekeeping, continuous examination through practice.

South Korea: The Middle Path

South Korea's AI Basic Act (passed December 2024, effective January 2026) watched both models and created a mix:

From the EU model:

Risk-based categorization

Transparency requirements

Safety obligations

From the China model:

Lower penalties (fines of up to ₩30M vs the EU's €35M)

More "endeavor to" language than mandates

Quick passage to iterate later

Plus uniquely Korean:

Explicit AI development support in the same Act

Focus on becoming an "AI hub"

Active civil society pushing for stronger protections

It's like someone watched me fail the US test repeatedly and drive in Chennai successfully, then designed a learner's permit system: test enough for baseline safety, keep barriers low for experimentation.

What My Licenses Taught Me About Regulatory Philosophy

Lesson 1: The Test Shapes the Operator

US testing made me cautious, rule-following, documentation-obsessed. Chennai made me adaptive, constantly scanning, comfortable with ambiguity. Neither is universally superior. They're just optimized for different contexts.

For AI: The EU produces companies obsessed with conformity assessment. China produces companies skilled at reading signals and pivoting. Both are real capabilities—just different ones.

Lesson 2: The Environment Matters More Than Entry

Today I can drive in both US and Chennai traffic, but I use completely different skills. The licensing requirement mattered less than learning to read the environment.

For AI: The question isn't just frameworks—it's the broader ecosystem. Do you have enforcement capacity? Civil society advocacy? Public trust in institutions? Cultural attitudes toward rules versus adaptation?

Lesson 3: You Need Both Skill Sets

When I get a chance to drive in the US now, I use the defensive awareness I learned in Chennai. In Chennai, I try to mirror the discipline I learned in the US (but I haven't found an opportunity yet!).

For AI: The future probably isn't pure EU or pure China. It's:

Rigorous assessment for high-risk systems (US-style)

Plus flexibility to adapt as technology evolves (Chennai-style)

Clear rules where we have consensus (US-style)

Plus discretion for emerging issues (Chennai-style)

The Meta-Question

Perhaps this is why the legal paper about AI sent me spiraling into driving memories. Both involve:

Complex systems we don't fully understand

Attempts to impose order through rules

Tension between control and adaptation

Questions about who gets filtered out

High stakes if we get it wrong

Fundamental uncertainty about which approach produces better outcomes

Maybe asking "which regulatory approach is better?" is like asking "which license made you a better driver?"

Wrong question.

Better question: "What are you optimizing for, in what context, and which approach serves those goals?"

The global AI regulatory landscape is still being mapped. The EU has drawn clear lanes and installed traffic lights. China is managing flow with discretionary direction. South Korea is marking wide lanes. The US is still debating whether we need lanes at all.

Singapore is watching, taking notes, and building the testing facility where others can practice.

P.S. If you found this useful, share it with someone trying to understand the global AI regulation landscape. And if you want to actually see these frameworks side-by-side—including Singapore's Model AI Governance Framework, the EU AI Act, and dozens more—check out RegXperience.