Risk, Regulations & Relationships: Visualizing AI Governance with Graphs

When we launched RegXperience at the Singapore FinTech Festival 2025, we weren't just unveiling another compliance tool. We were introducing a platform designed to streamline governance, risk, and compliance across multiple domains—with AI governance emerging as one of the most critical and complex themes we're addressing. This post shares the journey that led us to rethink how AI governance actually works—and why traditional approaches fall short.

As AI solution providers, building trust with clients has always been central to our work. In the machine learning era, assurance was straightforward. We could demonstrate safety and reliability with familiar metrics: RMSE, accuracy scores, F1 measures. These numbers told a clear story.

Then generative AI (GenAI) changed everything.

Suddenly, the questions shifted: How do you measure the "accuracy" of generated text? How do you quantify the risk of a hallucination? How do you test for bias in a model trained on the entire internet? Our existing evaluations were no longer sufficient. And critically, we were no longer just dealing with AI models—we were managing entire AI systems with complex workflows, data pipelines, interfaces, and human oversight layers.

We had to go back to first principles.

The Governance Labyrinth

Our approach was to understand risk management by consolidating best practices from global sources and applying them to the GenAI era. What we discovered was a fragmented landscape of overlapping terminology:

Risk Management Frameworks like NIST AI RMF contain internal controls

Global Standards like ISO/IEC 42001 and ISO 23894 provide clauses, controls, and implementation guidance

Regional Regulations like the EU AI Act and Canada's AIDA add legal requirements

The real challenge wasn't the artifacts themselves—it was their subjectivity and interconnections. A "control" for a legal team was a "requirement" for an engineering team. A single clause in the EU AI Act could map to dozens of internal controls.

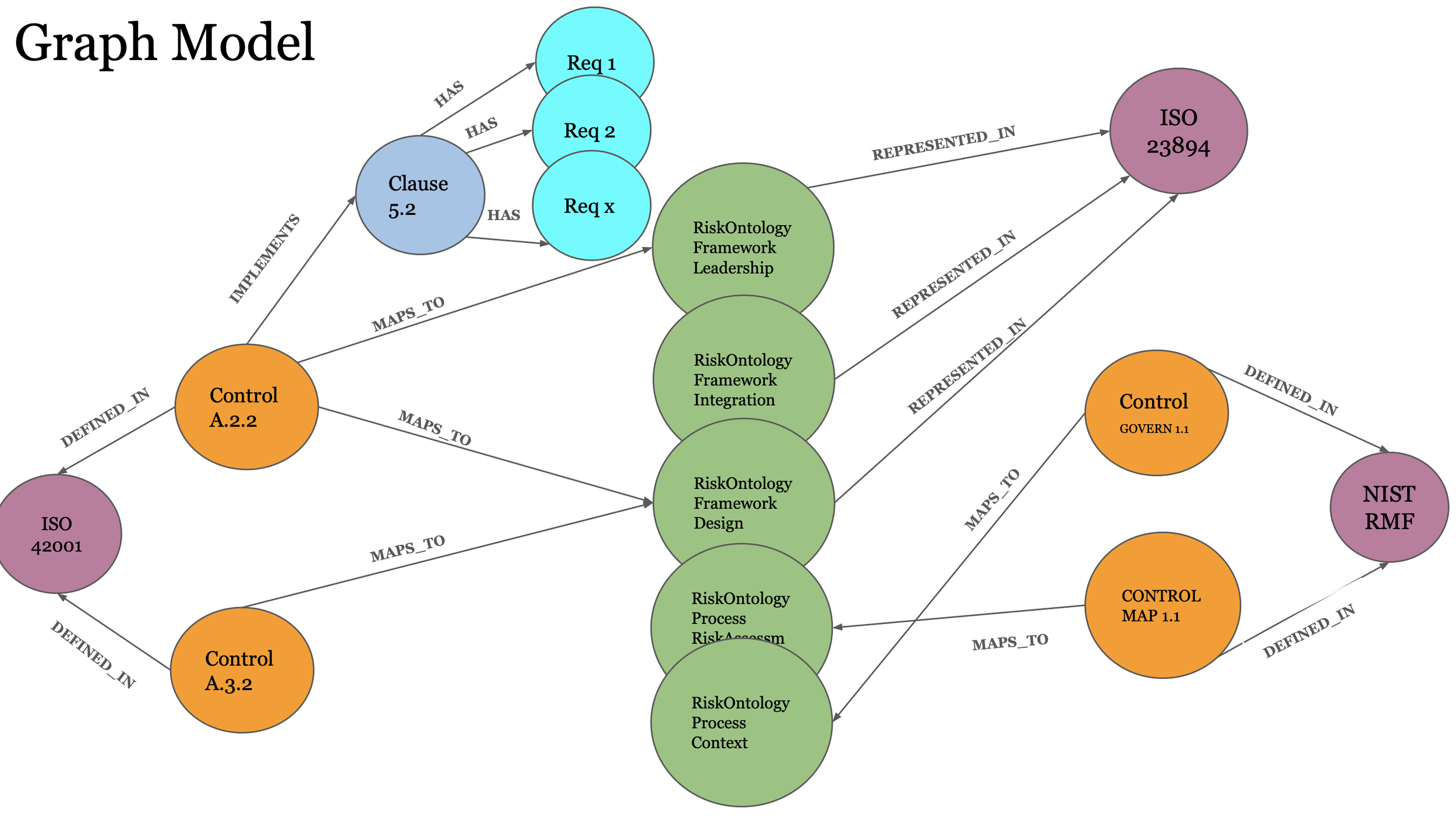

This couldn't be solved with a traditional crosswalk. It needed to be a graph.

Finding Our Common Language: Why ISO 23894 Matters

The industry norm for comparing standards is the crosswalk: a static, two-dimensional table that maps items from one standard to another. It's certainly useful, but limited. We wanted a graph instead: dynamic, relational, and able to capture complex, many-to-many relationships with context-rich edges. A graph provides a holistic view that reveals hidden dependencies and scales easily when new regulations or policies emerge.
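To make the contrast with a crosswalk concrete, here is a minimal sketch of edges that carry their own context. It is not RegXperience's actual data model: the `Edge` class, the `relation` and `note` fields, and every identifier such as `regulation:clause-A` are hypothetical placeholders, not real clause or control numbers.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    source: str     # e.g. a clause in a regulation or standard
    target: str     # e.g. an internal control
    relation: str   # the kind of link, carried on the edge itself
    note: str = ""  # free-text context a flat table cell has no room for


# Hypothetical placeholder identifiers, for illustration only.
EDGES = [
    Edge("regulation:clause-A", "control:C1", "implemented_by",
         "legal team reads C1 as a control"),
    Edge("regulation:clause-A", "control:C2", "implemented_by"),
    Edge("standard:clause-B", "control:C1", "implemented_by",
         "engineering team reads C1 as a requirement"),
]


def build_adjacency(edges):
    """Index edges in both directions so many-to-many links are easy to walk."""
    outgoing, incoming = defaultdict(list), defaultdict(list)
    for edge in edges:
        outgoing[edge.source].append(edge)
        incoming[edge.target].append(edge)
    return outgoing, incoming


outgoing, incoming = build_adjacency(EDGES)

# One clause fans out to several controls...
controls_for_clause_a = sorted(e.target for e in outgoing["regulation:clause-A"])
# ...and one control is reached from several sources.
sources_for_c1 = sorted(e.source for e in incoming["control:C1"])
```

A two-column crosswalk could list the same pairs, but it has no natural place for the `relation` and `note` attached to each link, and walking from a control back to every source that touches it means scanning the whole table.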

To build our graph, we needed a common vocabulary for AI risk. We found it in ISO 23894, which provides formal definitions of AI risk concepts. This became the backbone of our knowledge graph. The ISO 23894 ontology is built on two primary concepts:

Framework (The Structure) — The permanent, structural components of your governance system:

Leadership: Who is accountable?

Integration: How is risk management embedded across the organization?

Design & Implementation: How do we build and operate the system?

Evaluation & Improvement: How do we measure effectiveness and evolve?

Process (The Actions) — The repeatable, cyclical actions within that framework:

Communication: How do we share risk information?

Context: What's the scope and what external factors affect us?

Risk Assessment: How do we identify, analyze, and evaluate risks?

Risk Treatment: What do we do about identified risks?

Monitoring & Reporting: How do we track and document our progress?

This ontology gave us a shared risk language that could translate between different frameworks and regulations.
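The nine categories above can be sketched as a small enumeration. This is an illustrative encoding only: the `Concept_Name` label style follows the two examples mentioned in this post (`Process_RiskAssessment`, `Framework_Leadership`), and the remaining labels are assumed by extending that pattern rather than taken from ISO 23894 itself.

```python
from enum import Enum


class OntologyCategory(Enum):
    # Framework (the structure)
    FRAMEWORK_LEADERSHIP = "Framework_Leadership"
    FRAMEWORK_INTEGRATION = "Framework_Integration"
    FRAMEWORK_DESIGN_IMPLEMENTATION = "Framework_DesignImplementation"    # assumed label
    FRAMEWORK_EVALUATION_IMPROVEMENT = "Framework_EvaluationImprovement"  # assumed label
    # Process (the actions)
    PROCESS_COMMUNICATION = "Process_Communication"
    PROCESS_CONTEXT = "Process_Context"
    PROCESS_RISK_ASSESSMENT = "Process_RiskAssessment"
    PROCESS_RISK_TREATMENT = "Process_RiskTreatment"
    PROCESS_MONITORING_REPORTING = "Process_MonitoringReporting"          # assumed label

    @property
    def concept(self) -> str:
        """Return the primary concept, 'Framework' or 'Process'."""
        return self.value.split("_", 1)[0]


def categories_for(concept: str) -> list:
    """List every category that belongs to one primary concept."""
    return [c for c in OntologyCategory if c.concept == concept]


framework_categories = categories_for("Framework")
process_categories = categories_for("Process")
```

Keeping the vocabulary in one closed set means a mapping to an unknown category fails loudly (`OntologyCategory("Process_Typo")` raises `ValueError`) instead of silently creating a new node.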

With ISO 23894 as our translator, we systematically connected the dots:

Deconstructed ISO 42001: We extracted every clause and control, breaking them down into individual requirements and mapping the relationships between them

Extracted NIST AI RMF controls: We pulled guidance from each function (GOVERN, MAP, MEASURE, MANAGE)

Applied the ontology: Each control from both frameworks was mapped to the relevant ISO 23894 category (e.g., Process_RiskAssessment, Framework_Leadership)

The result? A unified AI governance graph that connects standards, frameworks, and regulations through a shared vocabulary.
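The three steps above can be sketched as follows, under some loud assumptions: the control identifiers (`iso-ctrl-1`, `govern-x`, and so on) are hypothetical placeholders, the mappings are invented for illustration, and the dictionary-of-sets layout is just one simple way to hold a bipartite graph, not necessarily how our graph is stored.

```python
from collections import defaultdict

# (framework, control_id, ontology_category) triples.
# Control ids and their mappings are hypothetical, for illustration only.
MAPPINGS = [
    ("ISO 42001", "iso-ctrl-1", "Framework_Leadership"),
    ("ISO 42001", "iso-ctrl-2", "Process_RiskAssessment"),
    ("NIST AI RMF", "govern-x", "Framework_Leadership"),
    ("NIST AI RMF", "map-y", "Process_Context"),
    ("NIST AI RMF", "map-y", "Process_RiskAssessment"),
]


def build_graph(mappings):
    """Build a bipartite graph: control nodes on one side, ontology categories on the other."""
    control_to_categories = defaultdict(set)
    category_to_controls = defaultdict(set)
    for framework, control_id, category in mappings:
        node = (framework, control_id)
        control_to_categories[node].add(category)
        category_to_controls[category].add(node)
    return control_to_categories, category_to_controls


def shared_categories(category_to_controls):
    """Categories reached by controls from more than one framework."""
    return sorted(
        category
        for category, controls in category_to_controls.items()
        if len({framework for framework, _ in controls}) > 1
    )


control_to_categories, category_to_controls = build_graph(MAPPINGS)
bridges = shared_categories(category_to_controls)
```

In this toy data, `bridges` holds the categories where the two frameworks meet, which is the sense in which the ontology acts as a translator: two controls never reference each other directly, yet they become comparable through the category they share.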

What the Graph Reveals

With our graph model in place, we could finally ask questions that were previously impossible to answer:

Which ISO clause is connected to the most controls? This reveals the most foundational requirements

Which risks have the most control coverage? This shows where frameworks overlap and where gaps exist

How does control coverage compare across frameworks? ISO 42001 controls linked to an average of 1.71 ontology edges, while NIST AI RMF controls linked to an average of 1.96, indicating NIST's slightly broader coverage

Which AI principles have the most/least support? This exposes which ethical principles are well-addressed and which need more attention
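Questions like these reduce to counting edges. The sketch below shows the arithmetic on toy data; the edge list is invented and does not reproduce the figures quoted above, and the function names are hypothetical. The average is simply the total number of ontology edges for a framework divided by the number of its controls that have at least one edge.

```python
from collections import Counter, defaultdict

# (framework, control_id, ontology_category) edges. Toy data, for illustration only.
EDGES = [
    ("ISO 42001", "c1", "Framework_Leadership"),
    ("ISO 42001", "c2", "Process_RiskAssessment"),
    ("ISO 42001", "c2", "Process_RiskTreatment"),
    ("NIST AI RMF", "n1", "Framework_Leadership"),
    ("NIST AI RMF", "n1", "Process_Context"),
    ("NIST AI RMF", "n2", "Process_RiskAssessment"),
    ("NIST AI RMF", "n2", "Process_MonitoringReporting"),
]


def average_edges_per_control(edges):
    """Mean number of ontology edges per control, grouped by framework.

    Controls with no edges never appear in `edges`, so they are not counted.
    """
    edges_per_control = Counter((fw, ctrl) for fw, ctrl, _ in edges)
    totals, controls = defaultdict(int), defaultdict(int)
    for (fw, _), n_edges in edges_per_control.items():
        totals[fw] += n_edges
        controls[fw] += 1
    return {fw: totals[fw] / controls[fw] for fw in totals}


def category_coverage(edges):
    """Number of controls (across all frameworks) linked to each category."""
    return Counter(category for _, _, category in edges)


averages = average_edges_per_control(EDGES)
coverage = category_coverage(EDGES)
```

Here the toy ISO 42001 controls average 3 / 2 = 1.5 edges and the toy NIST AI RMF controls average 4 / 2 = 2.0. Categories with a low count in `coverage` are candidates for gaps, and those with a high count are where frameworks overlap.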

These insights are a helpful step forward for GRC professionals. If you are transitioning from traditional risk management to AI risk management, here's what matters:

AI governance is fundamentally different: Traditional metrics don't work for generative AI. You're managing systems, not just models

A shared ontology is essential: Standards like ISO 23894 provide the common language needed to navigate fragmented regulations

Think in graphs, not tables: Static crosswalks can't capture the dynamic, interconnected nature of AI risk

Context is everything: Your compliance requirements depend on whether you're a provider, consumer, or end-user

The landscape will keep evolving: Choose tools and approaches that are scalable and adaptable

From Theory to Practice: RegXperience

This research wasn't just academic. It formed the foundation of RegXperience, our platform designed to help GRC consultants and growing businesses streamline their compliance processes, including compliance for AI systems. RegXperience currently incorporates multiple AI risk management workflows, including NIST AI RMF and other frameworks, translating the complex graph relationships we've mapped into actionable compliance pathways.

AI governance doesn't have to be a labyrinth. With the right framework, and the right way of visualizing relationships, it becomes navigable, even strategic. At DeepDive Labs, we're committed to making AI risk management accessible, actionable, and adaptable. Check out our 3-part informational series on YouTube. Whether you're a GRC consultant helping clients navigate compliance or a growing business trying to stay ahead of regulations, the graph-based approach offers a path from fragmentation to clarity.

Want to explore how graph-based AI governance can transform your compliance processes? Follow us on LinkedIn for regular updates on AI risk management.